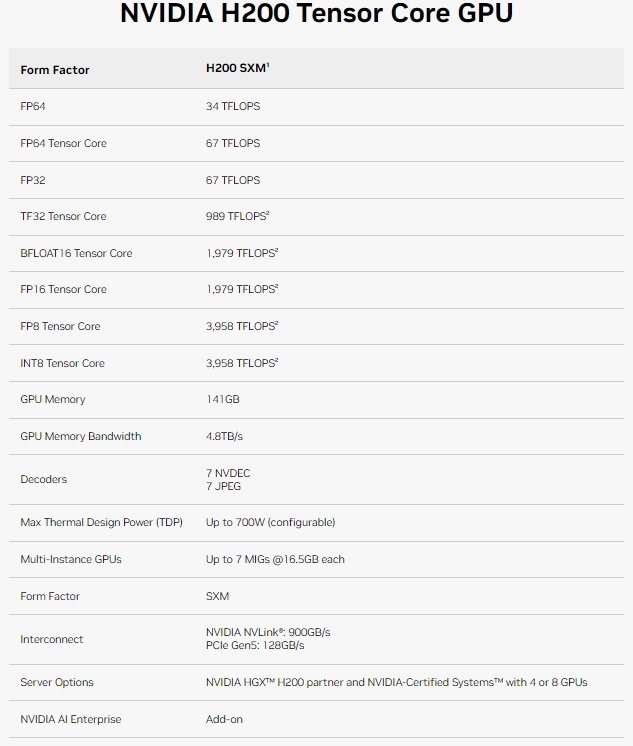

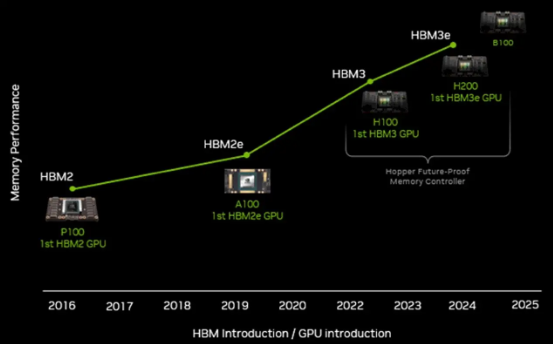

Thanks to its Transformer Engine, lower-precision floating-point formats, and faster HBM3 memory, the H100, which has been shipping in volume this year, already delivers an 11-fold increase in inference performance on the GPT-3 175B model compared with the A100. With larger and faster HBM3e memory and no other hardware or code changes, the H200 raises that figure to as much as 18 times the A100. Even compared with the H100, the H200 is 1.64 times faster, a gain that comes purely from the increase in memory capacity and bandwidth.
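The 1.64x figure follows from the two A100-relative numbers: 18 / 11 is about 1.64. Below is a minimal sketch of that arithmetic. It assumes both speedups were measured on the same GPT-3 175B inference workload against the same A100 baseline. The dictionary and function names are illustrative only.

```python
# Inference speedups on GPT-3 175B, normalized to the A100 (figures quoted above).
# Assumption: all numbers share the same A100 baseline and workload.
speedup_vs_a100 = {"A100": 1.0, "H100": 11.0, "H200": 18.0}


def relative_speedup(new: str, old: str) -> float:
    """Speedup of `new` over `old`, derived from their A100-normalized speedups."""
    return speedup_vs_a100[new] / speedup_vs_a100[old]


h200_over_h100 = relative_speedup("H200", "H100")
print(f"H200 vs H100: {h200_over_h100:.2f}x")  # prints "H200 vs H100: 1.64x"
```

Because every figure is expressed relative to the same baseline, any pairwise comparison is just a ratio of two entries.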
