Opportunities to Watch in Nvidia's Ecosystem as the Company Tops U.S. Market Cap

$NVIDIA (NVDA.US)$ has become the world's largest company by market capitalization, completing a remarkable rise from a niche chip maker to a $3 trillion giant in just over a year. According to J.D. Joyce, president of Houston-based financial advisory firm Joyce Wealth Management, Nvidia's stock price reflects strong investor enthusiasm for artificial intelligence, not just the company's earnings.

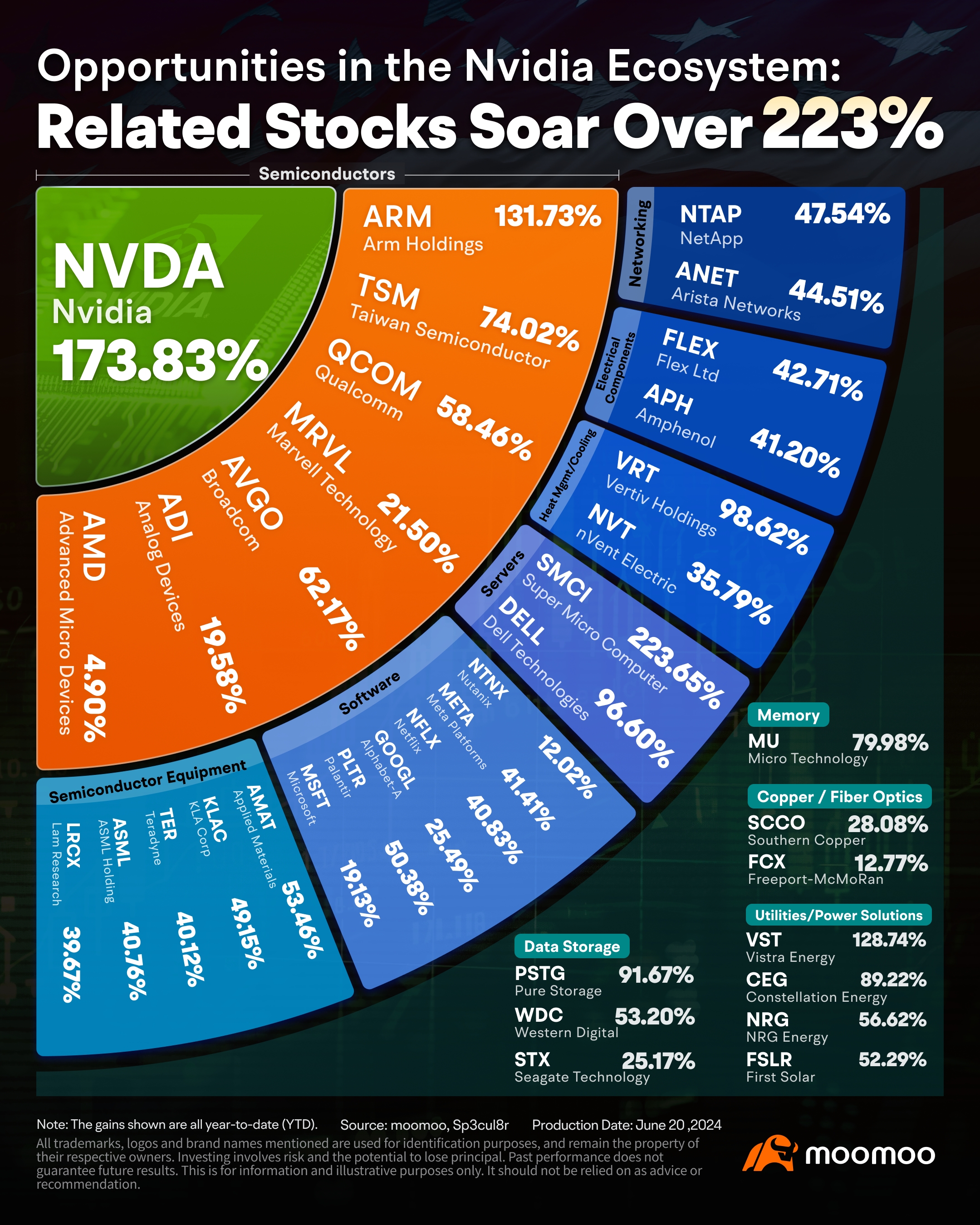

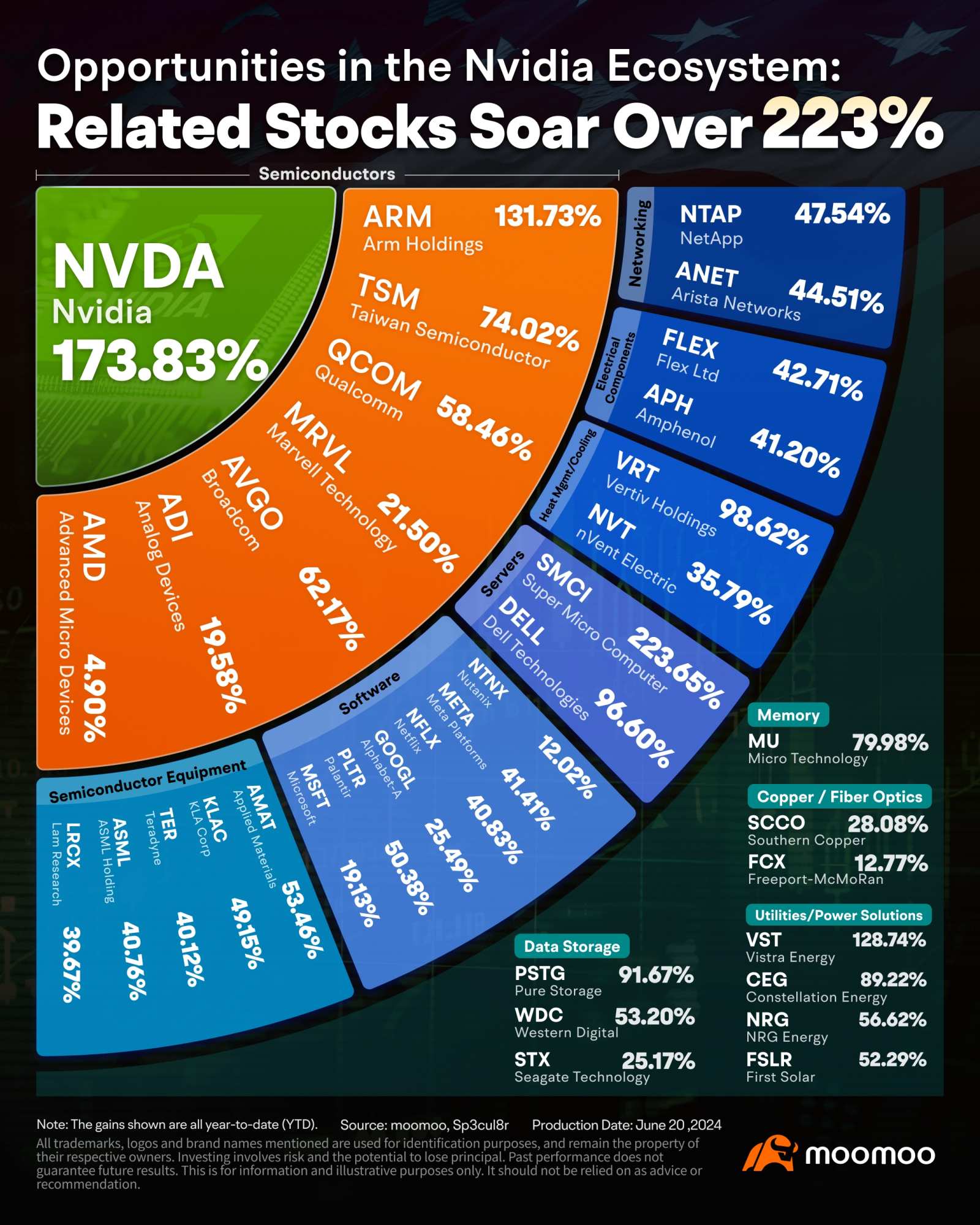

As Nvidia, the leading AI stock, has surged, other AI-related stocks have also posted significant gains, particularly those within Nvidia's supply chain. Companies like $Broadcom (AVGO.US)$, $Micron Technology (MU.US)$, and $Taiwan Semiconductor (TSM.US)$ are among them. Let's explore the opportunities worth watching.

Broadcom Is Coming for Nvidia Fast

Many of Nvidia's competitors aim to challenge its market dominance, with Broadcom frequently mentioned. A closer look reveals why: Broadcom's XPUs consume less than 600 watts, making them among the most power-efficient accelerators in the industry.

Bank of America recently named Broadcom a top AI pick, while acknowledging Nvidia as the leader in GPU technology. Broadcom has announced a 10-for-1 stock split and reported better-than-expected second-quarter revenue, projecting $51 billion in sales for the 2024 fiscal year. Analysts forecast $59.9 billion in sales for 2025, driven by efficiency gains from Broadcom's VMware acquisition and growth in custom chips.

While Nvidia currently holds a significant market cap lead and a near-monopoly in AI workloads with its CUDA architecture, Broadcom aims to present a viable alternative. It offers custom AI accelerator chips (XPUs), has seen growing demand for them, and plans to expand its clusters significantly. If successful, Broadcom could join the tech giants in the Trillion Dollar Club.

Micron Upgraded on AI Memory Product Potential

Micron Technology designs and sells memory products that support AI workloads alongside GPUs. Analysts have a consensus strong buy rating on the stock, with an average one-year price target of $145.05. In May 2024, Morgan Stanley upgraded Micron to Equalweight from Underweight and raised its price target to $130, citing benefits from advanced HBM memory products for AI.

Micron shares have surged 66% this year, but concerns persist about the upcoming earnings report because of an April earthquake in Taiwan and the absence of a positive preannouncement. Cantor Fitzgerald analyst C.J. Muse advises clients not to overthink it. He raised his price target from $150 to $180, suggesting a potential 27% upside from current levels, and attributes his optimism to the benefits he expects Micron to keep drawing from the AI boom, which has driven demand for high-bandwidth memory (HBM) products.
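As a quick sanity check on those figures, the arithmetic behind a price target and its stated upside can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the share price it produces is back-calculated from the $180 target and 27% upside quoted above, not a quoted market price.

```python
def implied_price(target: float, upside_pct: float) -> float:
    """Share price implied by a price target and a stated percentage upside."""
    return target / (1 + upside_pct / 100)


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


# Figures from the article: target raised from $150 to $180, ~27% upside.
price = implied_price(180.0, 27.0)
target_raise = pct_change(180.0, 150.0)

print(f"Implied share price at time of the note: ${price:.2f}")
print(f"Increase in price target: {target_raise:.1f}%")
```

The same two helpers can be reused for any target and upside pair; only the inputs change.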

Micron's 8-high HBM3E was the first memory product to be qualified by Nvidia for its H200 products, and Muse expects updates on further platform qualifications during the earnings call. Muse anticipates May-quarter revenue of $6.70 billion, slightly above the $6.65 billion consensus, though he believes revenue could have been higher without the earthquake's impact. For the August quarter, he projects revenue guidance of $7.71 billion, surpassing the $7.56 billion consensus forecast by analysts tracked by FactSet.
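To put the gap between Muse's estimates and consensus in perspective, the differences can be expressed as percentages. The snippet below is a minimal sketch using only the revenue figures quoted above (in billions of dollars); the helper name `pct_diff` and the variable names are illustrative assumptions.

```python
def pct_diff(estimate: float, baseline: float) -> float:
    """Percentage by which estimate exceeds (or trails) baseline."""
    return (estimate - baseline) / baseline * 100


# Revenue figures in billions of dollars, as quoted in the article.
muse_may, consensus_may = 6.70, 6.65
muse_aug, consensus_aug = 7.71, 7.56

may_gap = pct_diff(muse_may, consensus_may)      # Muse vs. consensus, May quarter
aug_gap = pct_diff(muse_aug, consensus_aug)      # Muse vs. consensus, August guidance
sequential = pct_diff(muse_aug, muse_may)        # quarter-over-quarter growth implied by Muse

print(f"May quarter vs. consensus:    {may_gap:+.2f}%")
print(f"August guidance vs. consensus: {aug_gap:+.2f}%")
print(f"Implied sequential growth:     {sequential:+.2f}%")
```

Framed this way, both estimates sit only modestly above consensus, while the implied quarter-over-quarter growth is considerably larger.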

Investors should also monitor gross margins, with the potential for favorable supply-demand dynamics to enhance pricing over the next few quarters. Muse notes that investor focus will be on the timing of a return to 40% gross margins, which some believe could happen as soon as August. Although Muse is less optimistic about this immediate timeline, he is confident that Micron will eventually achieve gross margins in the 50%-55% range, driven by what he expects to be a prolonged memory cycle.

TSMC's 3nm Supply Falls Short Amid AI Surge

Taiwan Semiconductor Manufacturing Company (TSMC), the world's largest contract chip manufacturer, saw a significant boost in May 2024 from increased global AI chip shipments. The company's monthly revenue surged 30% year over year to $7.1 billion. This growth coincides with $ASML Holding (ASML.US)$'s confirmation that it will ship its advanced High-NA EUV scanner to TSMC, a tool crucial for future manufacturing technologies. TSMC plans to move to 2-nanometer chips by 2025.

The rapid evolution of the AI industry has created massive demand for semiconductor capacity, with TSMC serving as a primary supplier for companies like $Apple (AAPL.US)$, $Qualcomm (QCOM.US)$, Nvidia, and $Advanced Micro Devices (AMD.US)$. Demand for TSMC's advanced 3nm chips now exceeds supply, leading IC design companies to consider significant price increases. TSMC is expanding capacity by converting some 5nm production lines to 3nm, aiming for a monthly output of 180,000 wafers. Future demand, including from $Intel (INTC.US)$'s upcoming Lunar Lake chips, could strain supply further and potentially lead to higher consumer prices.

Dell & Super Micro Supplying xAI's Supercomputer Racks

$Dell Technologies (DELL.US)$ and $Super Micro Computer (SMCI.US)$ will provide server racks for the supercomputer being developed by Elon Musk's AI startup, xAI, as announced by Musk on the social media platform X. Dell is responsible for assembling half of the server racks, while Super Micro, known for its liquid-cooling technology and relationships with companies like Nvidia, confirmed its involvement.

Additionally, Dell CEO Michael Dell mentioned in a separate post on X that Dell is collaborating with Nvidia to build an "AI factory" intended to power the next version of xAI's chatbot, Grok. According to a report by The Information, Musk has informed investors that xAI's supercomputer will support future iterations of the Grok chatbot.

Training AI models like Grok involves using tens of thousands of power-intensive chips, which are currently in short supply. Earlier this year, Musk revealed that training the Grok 2 model required about 20,000 Nvidia H100 GPUs, and future models like Grok 3 will need around 100,000 Nvidia H100 chips. Musk aims to have the supercomputer operational by the fall of 2025.


Source: MarketWatch, Yahoo Finance, WCCFtech, REUTERS

Disclaimer: Moomoo Technologies Inc. is providing this content for information and educational use only.

