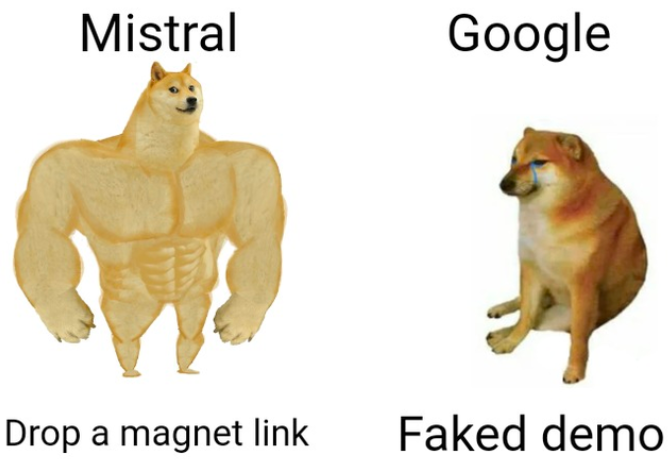

No press conference, no promo video: just a magnet link that kept developers up all night.

Since its launch, this French AI startup has shared only three posts.

In June this year, Mistral AI officially launched. A seven-page pitch won the company the largest seed round in European history.

In September, the company released Mistral 7B, billed as the most capable open-source model of its size.

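This December, it released Mixtral 8x7B, an open-source model built on a mixture-of-experts architecture reportedly similar to GPT-4's. A few days later, the Financial Times reported that Mistral AI's latest funding round had reached $415 million, valuing the company at a staggering $2 billion, roughly eight times its previous valuation.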

Today the company, with just over 20 employees, has set the record for the fastest growth in the history of open-source companies.