Speed is a double-edged sword

The AI community is buzzing, and the news is all over the Internet!

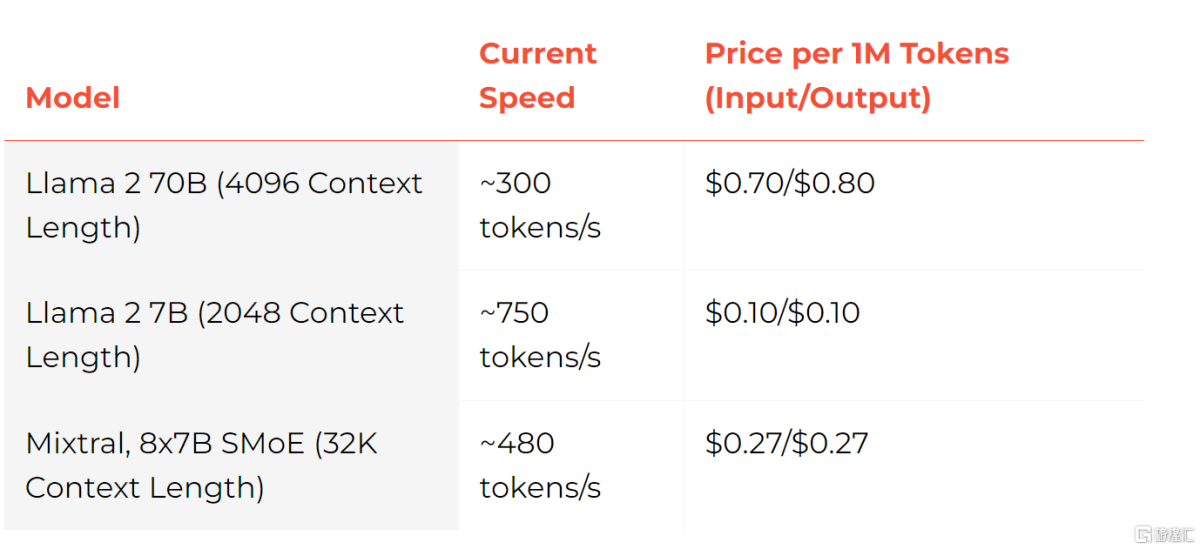

Recently, Groq has sparked widespread discussion. A large model running on its hardware can output 750 tokens per second, 18 times faster than GPT-3.5, and its self-developed LPU reportedly runs inference 10 times faster than Nvidia GPUs.

Surprisingly fast

Groq's name sounds similar to Grok, Musk's large model. Founded in 2016, Groq positions itself as an artificial intelligence solutions company.

Groq took off mainly because of its extremely fast processing speed. According to media reports, the company's chip runs inference 10 times faster than Nvidia GPUs at only one tenth of the cost.

Large models running on it generate nearly 500 tokens per second, far outpacing ChatGPT (GPT-3.5) at about 40 tokens per second.

At the top end, Llama 2 7B on Groq can reach 750 tokens per second, 18 times the speed of GPT-3.5.
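The speedup figures above are simple ratios of generation rates. A minimal Python sketch using only the numbers quoted in this article (about 40 tokens/s for GPT-3.5, nearly 500 and up to 750 tokens/s on Groq); the function name `speedup` is purely illustrative:

```python
def speedup(fast_tokens_per_s: float, baseline_tokens_per_s: float) -> float:
    """Return how many times faster one generation rate is than another."""
    return fast_tokens_per_s / baseline_tokens_per_s


GPT35_RATE = 40  # approximate tokens/s quoted for GPT-3.5

print(f"{speedup(500, GPT35_RATE):.2f}x")  # 12.50x
print(f"{speedup(750, GPT35_RATE):.2f}x")  # 18.75x
```

750 / 40 works out to 18.75, which the reports round to "18 times"; the typical rate of nearly 500 tokens/s corresponds to roughly 12.5 times.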

Eight members of Groq's founding team came from Google's early TPU core design team, yet rather than adopting a TPU, GPU, or CPU design, Groq developed its own language processing unit (LPU).

According to Groq's official website, Meta AI's Llama 2 70B running on the Groq LPU inference engine outperforms all other cloud-based inference providers, with 18 times higher throughput.

Can it replace Nvidia?

However, speed is not the only decisive factor in AI development. Even as Groq trends, skeptical voices have emerged.

First, Groq is only cheap on the surface. A Groq LPU card has just 230 MB of memory yet costs over $20,000.

By some netizens' estimates, the Nvidia H100 is about 11 times more cost-effective than Groq.

More importantly, the Groq LPU has no high-bandwidth memory (HBM) at all. Instead, it carries only a small block of ultra-fast static random-access memory (SRAM), which is 20 times faster than HBM3.

This also means that running a single AI model takes far more Groq LPUs than it would Nvidia H200s.

In fact, according to a Groq employee, Groq's LLM runs on hundreds of chips.
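A rough back-of-the-envelope estimate shows why "hundreds of chips" is plausible. This sketch rests on stated assumptions, not on Groq's actual deployment: Llama 2 70B weights stored at 16-bit precision (2 bytes per parameter), decimal units (1 MB = 10^6 bytes), and every weight held in on-chip memory (230 MB of SRAM per LPU card, 141 GB of HBM3e per Nvidia H200). It ignores activations, KV cache, and any lower-precision formats, and the helper name `cards_to_hold_weights` is hypothetical.

```python
import math


def cards_to_hold_weights(num_params: float, bytes_per_param: float,
                          memory_per_card_bytes: float) -> int:
    """Minimum number of cards whose combined memory can hold the model weights.

    Assumption: weights only; activations and KV cache are ignored.
    """
    total_bytes = num_params * bytes_per_param
    return math.ceil(total_bytes / memory_per_card_bytes)


LLAMA2_70B_PARAMS = 70e9  # 70 billion parameters
FP16_BYTES = 2            # assumed 16-bit weights
GROQ_SRAM_BYTES = 230e6   # 230 MB SRAM per LPU card (decimal units assumed)
H200_HBM_BYTES = 141e9    # 141 GB HBM3e per Nvidia H200 (decimal units assumed)

print(cards_to_hold_weights(LLAMA2_70B_PARAMS, FP16_BYTES, GROQ_SRAM_BYTES))  # 609
print(cards_to_hold_weights(LLAMA2_70B_PARAMS, FP16_BYTES, H200_HBM_BYTES))   # 1
```

Under these simplifying assumptions, the roughly 140 GB of weights spills across about 600 LPU cards, while it fits within a single H200's memory, which illustrates the memory-capacity gap the critics point to.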

On this point, Yao Jinxin, a chip expert at Tencent Technology, believes that Groq's chips cannot currently replace Nvidia's.

In his view, speed is a double-edged sword for Groq. Its architecture pairs small memory with large computing power: a limited amount of data is processed with extremely high compute, which is what makes it so fast.

On the other hand, that speed comes at the cost of a single card's very limited throughput capacity. Matching the throughput of an H100 requires many more cards.

Still, he noted that the Groq architecture has scenarios where it excels: it is well suited to the many workloads that require frequent data movement.