① Cerebras has launched what it claims is the world's fastest AI inference service, built on its own chip computing systems; ② Cerebras builds memory directly into a giant chip, which gives it huge on-chip memory and extremely high memory bandwidth.

“Science and Technology Innovation Board Daily”, August 28 (Editor Zhu Ling) After the market closes on Wednesday local time, Nvidia will report its second-quarter results, the last major earnings report of the season for the secondary market, and global investors are on edge. Just one day earlier (August 27, local time), Cerebras Systems, a US AI processor chip unicorn, launched what it claims is the world's fastest AI inference service. The service runs on Cerebras' own chip computing systems, and the company says it is 10 to 20 times faster than systems built on Nvidia H100 GPUs.

Nvidia GPUs currently dominate the market for both AI training and inference. Since launching its first AI chip in 2019, Cerebras has focused on selling AI chips and computing systems, and has set out to challenge Nvidia in AI training.

According to a report by US tech outlet The Information, OpenAI's AI inference services are expected to bring in $3.4 billion in revenue this year. With the AI inference pie this large, Andrew Feldman, co-founder and CEO of Cerebras, says there is room in the market for Cerebras too.

With this inference service, Cerebras is no longer only selling AI chips and computing systems; it is opening a second, usage-based revenue curve and mounting an all-out attack on Nvidia. “Stealing enough market share from Nvidia to make them angry” is how Feldman put it.

Fast and cheap

Cerebras' AI inference service shows significant advantages in both speed and cost. According to Feldman, measured in tokens output per second, Cerebras' inference is 20 times faster than the AI inference services run by cloud providers such as Microsoft Azure and Amazon AWS.

At the press conference, Feldman ran Cerebras' inference service and Amazon AWS's side by side. Cerebras finished the inference and produced its output almost instantly, at 1,832 tokens per second, while AWS took several seconds to finish, at only 93 tokens per second.
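To make the two throughput figures concrete, the short Python sketch below turns them into a speedup ratio and into the time each service would need to stream a reply. The 1,000-token reply length is a hypothetical value chosen for illustration, and the sketch assumes a constant output rate, ignoring time-to-first-token and network latency.

```python
# Throughput figures quoted at the press conference (tokens per second).
CEREBRAS_TPS = 1832
AWS_TPS = 93


def speedup(fast_tps: float, slow_tps: float) -> float:
    """How many times more tokens per second the faster service outputs."""
    return fast_tps / slow_tps


def seconds_to_generate(num_tokens: int, tps: float) -> float:
    """Time to stream num_tokens at a constant rate of tps tokens/second.

    Assumption: constant rate, no time-to-first-token or network delay.
    """
    return num_tokens / tps


print(f"Speedup: {speedup(CEREBRAS_TPS, AWS_TPS):.1f}x")
# Hypothetical 1,000-token answer, for illustration only.
for name, tps in [("Cerebras", CEREBRAS_TPS), ("AWS", AWS_TPS)]:
    print(f"{name}: {seconds_to_generate(1000, tps):.2f} s")
```

The ratio works out to about 19.7x, in line with the "20 times faster" claim, and under these assumptions a 1,000-token answer takes roughly half a second on Cerebras versus more than ten seconds on AWS.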

According to Feldman, faster inference makes real-time voice interaction possible, and lets a model produce more accurate and relevant answers by running multiple rounds of generation, drawing on more external sources, and processing longer documents, a qualitative leap for AI inference.

Beyond speed, Cerebras also has a large cost advantage. Feldman said Cerebras' AI inference service is 100 times more cost-effective than services from AWS and others. Taking Meta's open-source Llama 3.1 70B large language model as an example, Cerebras charges only 60 cents per million tokens, while the same service from a typical cloud provider costs $2.90 per million tokens.
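The article does not say how the "100 times" figure is computed. One plausible reading, which is an assumption here, is a price-performance ratio: throughput divided by price, compared between the two services. The sketch below combines the press-conference throughput figures with the Llama 3.1 70B prices; note that it mixes the AWS speed demo with a "typical cloud provider" price, so treat the result as a rough illustration only.

```python
# Prices quoted for Llama 3.1 70B (US dollars per million tokens).
CEREBRAS_PRICE_PER_M = 0.60
CLOUD_PRICE_PER_M = 2.90
# Throughput quoted at the press conference (tokens per second).
CEREBRAS_TPS = 1832
AWS_TPS = 93


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of generating `tokens` tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def price_performance(tps: float, price_per_million: float) -> float:
    """Throughput per unit of price; only meaningful as a ratio between services."""
    return tps / price_per_million


price_ratio = CLOUD_PRICE_PER_M / CEREBRAS_PRICE_PER_M
# Assumption: "cost-effectiveness" = (speed gain) x (price reduction).
pp_ratio = (price_performance(CEREBRAS_TPS, CEREBRAS_PRICE_PER_M)
            / price_performance(AWS_TPS, CLOUD_PRICE_PER_M))

print(f"Price ratio: {price_ratio:.2f}x")
print(f"Price-performance ratio: {pp_ratio:.0f}x")
print(f"10M tokens: ${cost_usd(10_000_000, CEREBRAS_PRICE_PER_M):.2f} "
      f"vs ${cost_usd(10_000_000, CLOUD_PRICE_PER_M):.2f}")
```

On price alone the gap is under 5x; multiplying by the roughly 20x speed advantage gives about 95x, which is in the neighborhood of the claimed 100x. That supports the price-performance reading, though the article does not confirm it.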

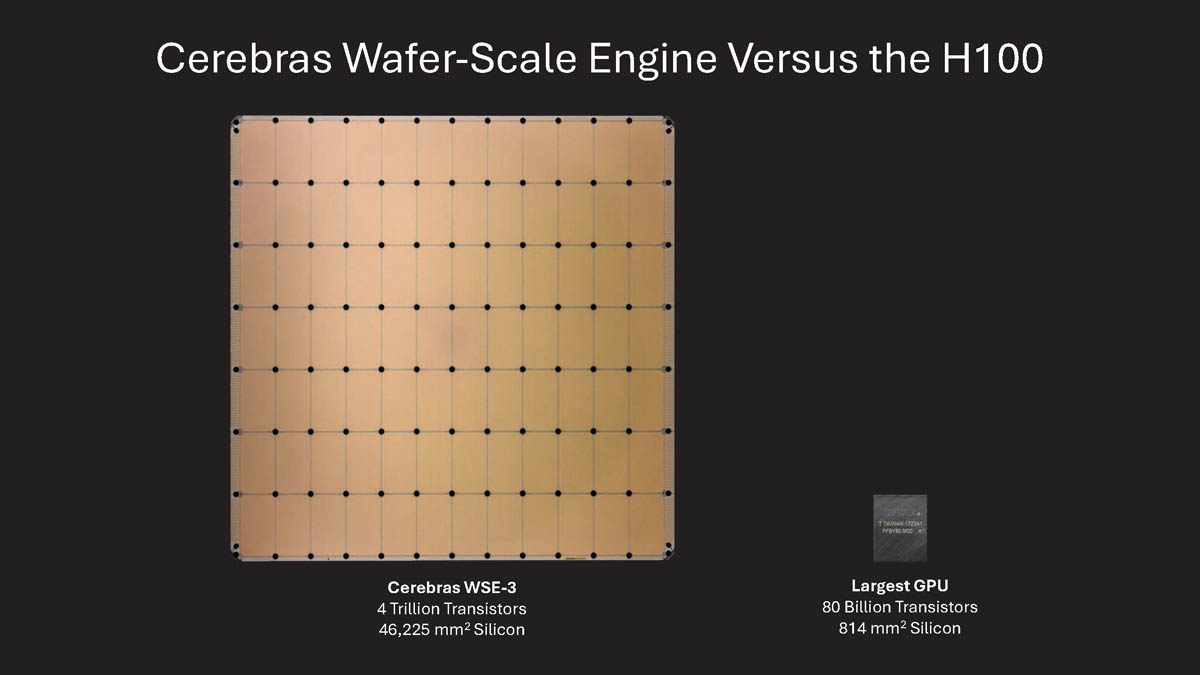

56 times the area of the current largest GPU

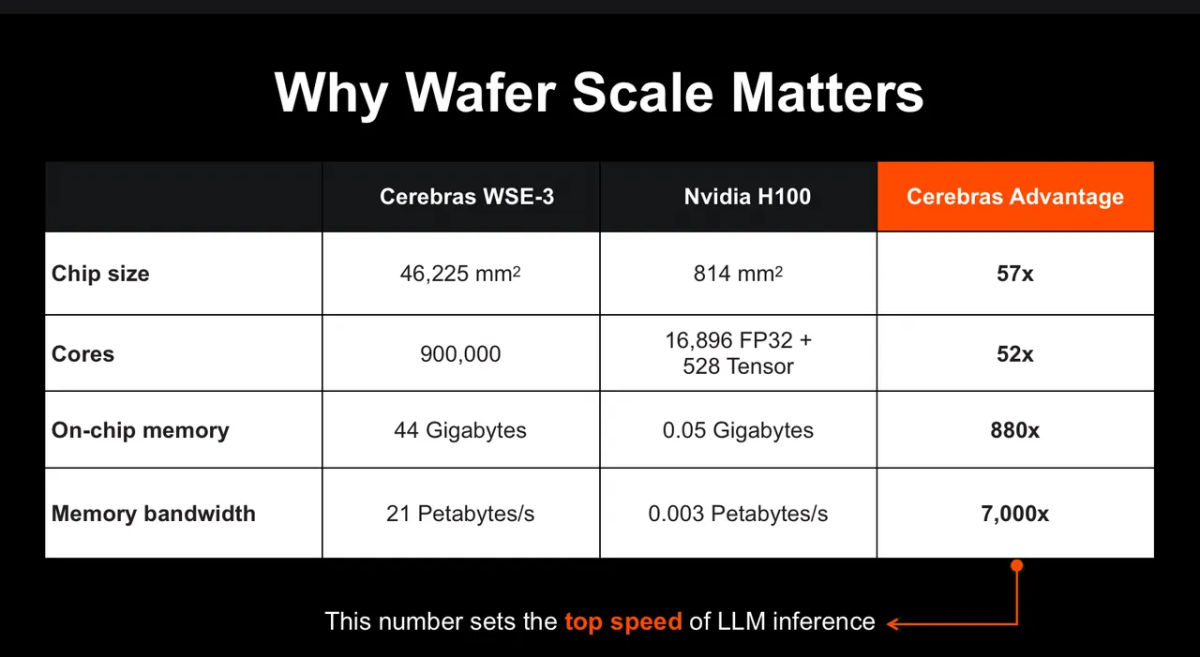

Cerebras' AI inference service is fast and cheap because of the design of its WSE-3 chip, the third-generation processor Cerebras launched in March this year. The chip is huge: it takes up almost an entire 12-inch (300 mm) wafer, is larger than a book, and has an area of about 462.25 square centimeters, 56 times that of the largest GPU currently available.
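The area comparison can be checked with a little arithmetic. The sketch below converts the quoted 462.25 cm² to square millimeters, derives the side length assuming the chip is square, and divides by the H100's die area. The 814 mm² H100 figure is an assumption taken from commonly cited specifications, not from the article.

```python
import math

WSE3_AREA_CM2 = 462.25                # area quoted in the article
WSE3_AREA_MM2 = WSE3_AREA_CM2 * 100   # 1 cm^2 = 100 mm^2, so 46,225 mm^2
H100_DIE_AREA_MM2 = 814.0             # assumption: commonly cited H100 die size

# Side length, assuming the chip is a square.
side_mm = math.sqrt(WSE3_AREA_MM2)
area_ratio = WSE3_AREA_MM2 / H100_DIE_AREA_MM2

print(f"WSE-3 area: {WSE3_AREA_MM2:,.0f} mm^2 ({side_mm:.0f} mm per side)")
print(f"Area ratio vs H100: {area_ratio:.1f}x")
```

Under these assumptions the chip is 215 mm on a side and about 56.8 times the H100 die area, consistent with the figure above.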

Unlike Nvidia's GPUs, the WSE-3 does not rely on separate high-bandwidth memory (HBM) that must be accessed through an off-chip interface. Instead, its memory is built directly into the chip.

Thanks to its size, the WSE-3 has 44 GB of on-chip memory, almost 900 times that of the Nvidia H100, and 7,000 times the H100's memory bandwidth.
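The article does not state which H100 figures these ratios are measured against. The sketch below assumes the capacity comparison is with the H100's 50 MB of on-chip L2 cache, not its off-chip HBM, and that the WSE-3's bandwidth is Cerebras' published 21 PB/s; both are assumptions. It then works backwards from the quoted 7,000x to the H100 bandwidth that comparison implies.

```python
# On-chip memory capacity, in decimal bytes.
WSE3_ON_CHIP_BYTES = 44e9    # 44 GB, as quoted in the article
H100_ON_CHIP_BYTES = 50e6    # assumption: H100's 50 MB L2 cache as the baseline

# Memory bandwidth, in bytes per second.
WSE3_BANDWIDTH = 21e15       # assumption: 21 PB/s, Cerebras' published WSE-3 figure
QUOTED_BANDWIDTH_RATIO = 7000

capacity_ratio = WSE3_ON_CHIP_BYTES / H100_ON_CHIP_BYTES
# Working backwards from the quoted 7,000x gives the H100 bandwidth it implies.
implied_h100_bandwidth = WSE3_BANDWIDTH / QUOTED_BANDWIDTH_RATIO

print(f"On-chip memory ratio: {capacity_ratio:.0f}x")
print(f"Implied H100 bandwidth: {implied_h100_bandwidth / 1e12:.1f} TB/s")
```

With these assumptions the capacity ratio comes to 880x, matching "almost 900 times", and the implied H100 bandwidth is 3 TB/s, which is in the range of the H100's HBM bandwidth. If the baseline were the H100's 80 GB of off-chip HBM instead, the capacity comparison would look very different.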

According to Feldman, memory bandwidth is the fundamental factor limiting the inference performance of language models. Cerebras integrates logic and memory on a single giant chip, with huge on-chip memory and extremely high memory bandwidth, so it can process data and generate inference results quickly. “It's a speed a GPU can't reach.”
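The reason bandwidth matters can be shown with a standard back-of-the-envelope bound. When generating one token at a time (batch size 1), a model must read all of its weights from memory for every token, so single-stream output speed can be no higher than bandwidth divided by the size of the weights. The sketch below is a simplified roofline-style estimate that ignores compute time, KV-cache reads, and batching. The 16-bit weights and the 3.35 TB/s bandwidth are illustrative assumptions, not figures from the article.

```python
def max_decode_tokens_per_s(params: float, bytes_per_param: float,
                            bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed when every generated token
    must stream all model weights from memory once (batch size 1).

    Simplification: ignores compute time, KV-cache reads and batching.
    """
    weight_bytes = params * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


# Llama 3.1 70B with assumed 16-bit (2-byte) weights, about 140 GB in total.
PARAMS = 70e9
BYTES_PER_PARAM = 2

# Hypothetical bandwidths: one HBM-class figure, and 10x and 100x that.
for bw in (3.35e12, 3.35e13, 3.35e14):
    bound = max_decode_tokens_per_s(PARAMS, BYTES_PER_PARAM, bw)
    print(f"{bw / 1e12:>6.1f} TB/s -> at most {bound:,.0f} tokens/s")
```

The bound scales linearly with bandwidth: at an HBM-class 3.35 TB/s it is only about 24 tokens per second for a single stream, which is why moving the weights into very high-bandwidth on-chip memory can raise output speed so sharply.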

Beyond its speed and cost advantages, the WSE-3 is an all-rounder for both AI training and inference, and performs well across a wide range of AI tasks.

Under its plan, Cerebras will set up AI inference data centers in multiple locations and charge for inference by the number of requests. Cerebras will also sell CS-3 computing systems built on the WSE-3 to cloud service providers.