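① The newly launched MI325X, like the MI300X, is based on the CDNA 3 architecture, with upgrades in areas such as HBM3e memory capacity; ② AMD previewed that the MI355X, due next year, will bring a significant architectural upgrade and a further increase in memory capacity; ③ Lisa Su once again made a bold prediction, forecasting that the overall compute chip market will reach $500 billion by 2028.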

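Cailian Press, October 11 (Editor: Shi Zhengcheng) AMD, which has long lived in Nvidia's shadow in AI compute, held an AI-themed launch event on Thursday and unveiled a slate of new products, including the MI325X accelerator. Market enthusiasm was lukewarm, however, and AMD's share price dropped sharply.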

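As the product drawing the most market attention, the MI325X is based on the same CDNA 3 architecture as the earlier MI300X, and its basic design is similar. The MI325X is therefore better viewed as a mid-cycle upgrade: it carries 256GB of HBM3e memory with memory bandwidth of up to 6TB/s. The company expects production to begin in the fourth quarter, with availability through partner server makers in the first quarter of next year.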

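AMD positions its AI accelerators as more competitive in use cases where AI models generate content or run inference, rather than in training models on massive amounts of data. Part of the reason is that AMD packs more high-bandwidth memory onto its chips, which lets them outperform some Nvidia chips. By comparison, Nvidia gives its latest B200 chip 192GB of HBM3e memory, that is, two B100 chips each connected to four 24GB memory chips, although its memory bandwidth reaches 8TB/s.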
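The memory comparison above comes down to simple arithmetic, which can be sketched as follows. This is only an illustration using the figures quoted in this article; the variable names are made up for the example, and none of the numbers are measured results.

```python
# Figures as quoted in the article (not measured, not from a spec sheet).
B100_CHIPS = 2            # the article describes the B200 as two B100 chips
MEMORY_CHIPS_PER_B100 = 4 # each connected to four HBM3e memory chips
GB_PER_MEMORY_CHIP = 24

b200_capacity_gb = B100_CHIPS * MEMORY_CHIPS_PER_B100 * GB_PER_MEMORY_CHIP

mi325x_capacity_gb = 256
mi325x_bandwidth_tbs = 6.0  # "up to" 6TB/s per the article
b200_bandwidth_tbs = 8.0

# Ratios of MI325X to B200: above 1 favors AMD, below 1 favors Nvidia.
capacity_ratio = mi325x_capacity_gb / b200_capacity_gb
bandwidth_ratio = mi325x_bandwidth_tbs / b200_bandwidth_tbs

print(f"B200 capacity: {b200_capacity_gb} GB")
print(f"MI325X vs B200 capacity: {capacity_ratio:.2f}x")
print(f"MI325X vs B200 bandwidth: {bandwidth_ratio:.2f}x")
```

On these numbers the MI325X holds about a third more memory than the B200 but offers three quarters of its bandwidth, which matches the article's framing of capacity as AMD's edge.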

AMD chief Lisa Su stressed at the event: "What you can see is that the MI325, when running Llama 3.1, delivers up to 40% more performance than Nvidia's H200."

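According to official documents, the MI325, with its advantage in specifications, offers 1.3 times the peak theoretical FP16 (16-bit floating point) and FP8 compute performance of the H200.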

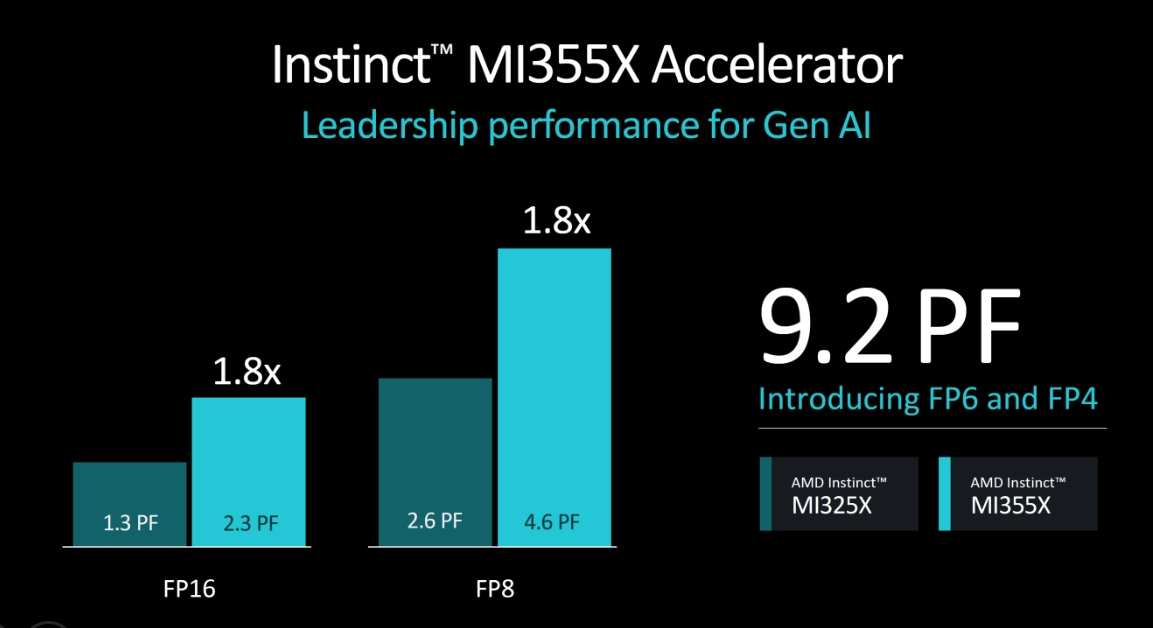

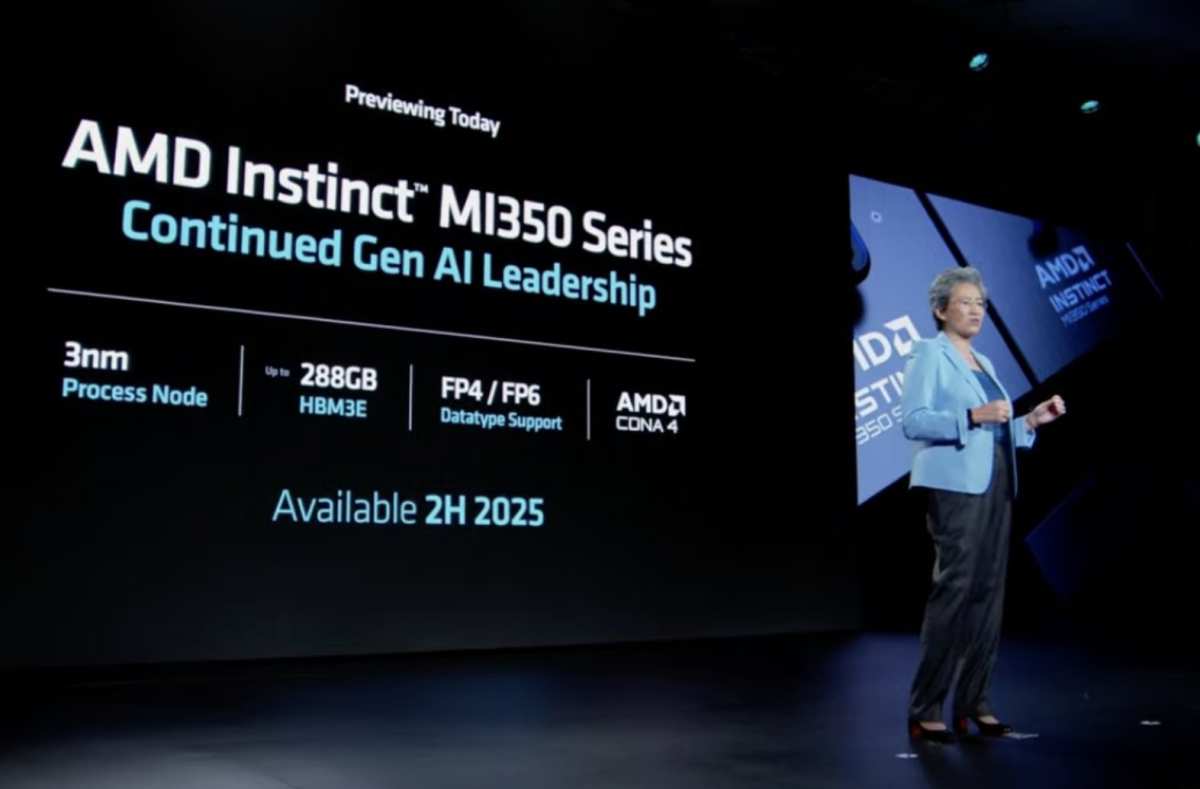

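Looking beyond the MI325X, AMD also dangled a big promise before the market: next year the company will launch the MI350 series of GPUs on the CDNA 4 architecture. Besides raising HBM3e capacity further to 288GB and moving to a 3nm process, the promised performance gains are striking. FP16 and FP8 performance, for example, is 80% higher than that of the just-launched MI325. The company also said the MI350 series will deliver a 35-fold improvement in inference performance over CDNA 3 accelerators.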

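AMD expects platforms carrying the MI355X GPU to reach the market in the second half of next year, going head-to-head, alongside the MI325, with Nvidia's Blackwell-architecture products.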

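Su also said on Thursday that the market for data center AI accelerators will grow to $500 billion by 2028, up from $45 billion in 2023. In earlier remarks she had expected this market to reach $400 billion by 2027.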
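To put that forecast in perspective, the compound annual growth rate it implies can be worked out from the two endpoints quoted above. The helper `implied_cagr` below is a hypothetical name introduced for this illustration, and the calculation assumes smooth compounding over the five years from 2023 to 2028, which the forecast itself does not claim.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value
    into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints as quoted in the article, in billions of US dollars.
market_2023 = 45
market_2028 = 500

cagr = implied_cagr(market_2023, market_2028, 2028 - 2023)
print(f"Implied annual growth 2023-2028: {cagr:.1%}")
```

The result is a little over 60% a year, which shows how aggressive the projection is.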

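Notably, most industry analyses put Nvidia's share of the AI chip market above 90%, which is also why the chip leader can command a 75% gross margin. For the same reason, the two companies' share-price performance differs widely: after today's event, AMD's (red line) year-to-date gain narrowed to under 20%, while Nvidia's (green line) is close to 180%.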

(Year-to-date share-price gains for AMD and Nvidia. Source: TradingView)

Taking a swing at Intel along the way

For AMD, the bulk of data center revenue still comes from CPU sales. In real-world deployments, GPUs also need to be paired with CPUs to be used.

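In its June-quarter results, AMD's data center sales doubled year over year to $2.8 billion, of which AI chips accounted for only $1 billion. The company said its share of the data center CPU market is about 34%, behind Intel's Xeon line.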

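As the challenger in data center CPUs, AMD also launched its fifth-generation EPYC "Turin" server CPUs on Thursday, ranging from the 8-core 9015 ($527) to the top-end 192-core 9965 ($14,831). AMD stressed that the EPYC 9965 is "several times stronger" than Intel's flagship server CPU, the Xeon 8592+, on a number of performance measures.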

(Lisa Su shows off a "Turin" server CPU. Source: AMD)

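At the event, AMD brought Kevin Salvadori, Meta's vice president of infrastructure and engineering, on stage; he revealed that the company has deployed more than 1.5 million EPYC CPUs.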