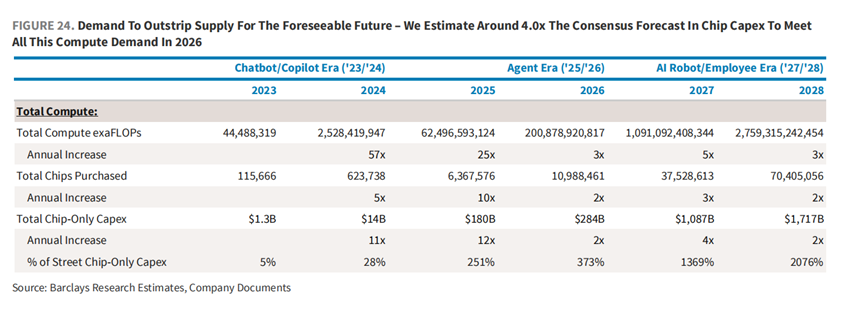

Barclays predicts that as AI applications become widespread, inference will account for more than 70% of total computing demand by 2026. Meeting that demand may require chip capital expenditure about four times the current consensus.

In an era of explosive AI growth, where will the next breakthrough come from? A research report released by Barclays on the 22nd offers an answer, setting out a clear "AI roadmap" for how the technology is likely to evolve.

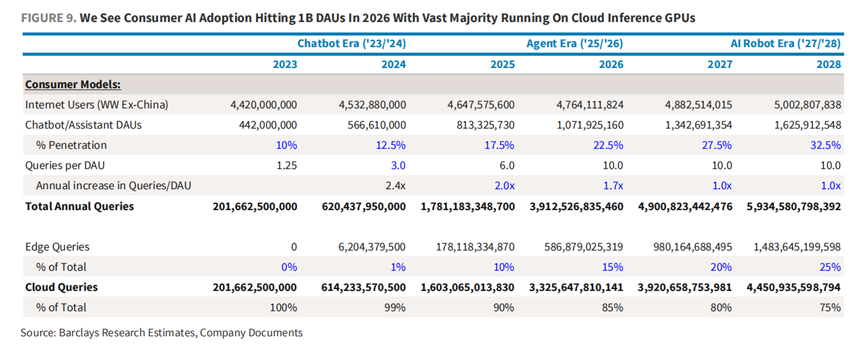

The report says AI adoption will go through three stages: the current "chatbot/assistant era"; the "AI agent era", which will gradually unfold over 2025 and 2026; and finally the "digital employee and robot era", beginning in 2027.

Barclays also predicts that as AI applications spread, inference will account for more than 70% of total computing demand by 2026, and that meeting all of this demand may require about four times the chip capital expenditure currently expected.

Three major stages clearly defined.

Barclays defines the current stage (2023-2024) as the "chatbot/assistant era", characterized by the widespread use of chatbots (such as ChatGPT and Meta AI) and some early AI assistants (such as Copilot).

At this stage, although performance at the model and infrastructure levels keeps improving, the application level still lags. Most applications remain experimental and have not yet achieved broad product-market fit.

"In this initial stage, most investment returns accrue to hardware infrastructure providers, similar to the early build-out of the internet and mobile."

The report notes that today's leading AI products, such as ChatGPT and Meta AI, have more than 200 million monthly active users, but this is only around 10% of the global consumer mobile application market.

Barclays predicts that the "AI agent era" will arrive between 2025 and 2026, with the core focus on the widespread use of AI agents that can complete tasks autonomously.

Unlike chatbots and assistants, AI agents can complete complex tasks by passing requests to a model multiple times, while reducing direct human intervention. This shift is driving a surge in demand for AI inference computing.

Barclays predicts that by 2026, AI inference will account for more than 70% of total computing demand.

"Investment returns may move up to the application layer (or the model and API layer, although the risks of commoditization and competition may increase as early innovators' lead gradually erodes)."

Unlike in the chatbot era, large companies may earn strong returns in the agent era, as inference revenue will surge with adoption.

Finally, Barclays believes that from 2027 onward, AI will enter the "digital employee and robot era".

In enterprise applications, AI agents may evolve into "digital employees" that complete tasks independently, while in the consumer market, intelligent robots will gradually enter households and take on simple, repetitive daily chores.

Barclays predicts that by then, AI adoption will reach the scale of the internet user base, exceeding 4 billion people. Investment returns in this era should accrue at the application layer, but "it is difficult to predict today."

Inference demand rises sharply, and chip capital expenditure may need to be four times the current consensus.

Barclays emphasizes that in the coming years, the demand for inference computing will significantly exceed market expectations, driven by the rise of a new generation of AI products and services.

The report notes that across the three stages of AI development, each stage requires more inference computing than the one before.

Over the next few years, computing demand will outstrip supply. Barclays estimates that in 2025 the number of GPU and ASIC chips required for training and inference will be 250% higher than current consensus forecasts, rising to 14 times by 2027. The core drivers are the widespread use of consumer and enterprise AI assistants and the spread of higher-performance, multimodal AI.

Notably, Barclays estimates that chip capital expenditure in 2026 will need to be four times the current consensus.

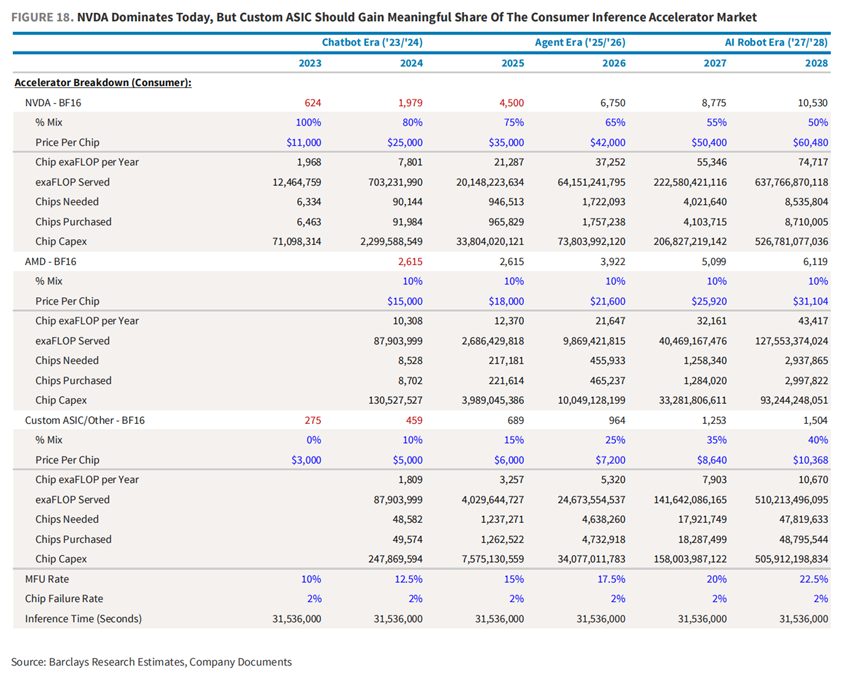

On the supply side, the report notes that Nvidia's GPUs currently hold an 80% share of the inference computing market, but this share may fall to 50% by 2028, partly because large cloud providers are launching custom ASIC chips to win share in inference.

The profit prospects of AI products are promising.

In addition to technical and market analysis, Barclays also conducted a detailed evaluation of the cost-effectiveness of AI products.

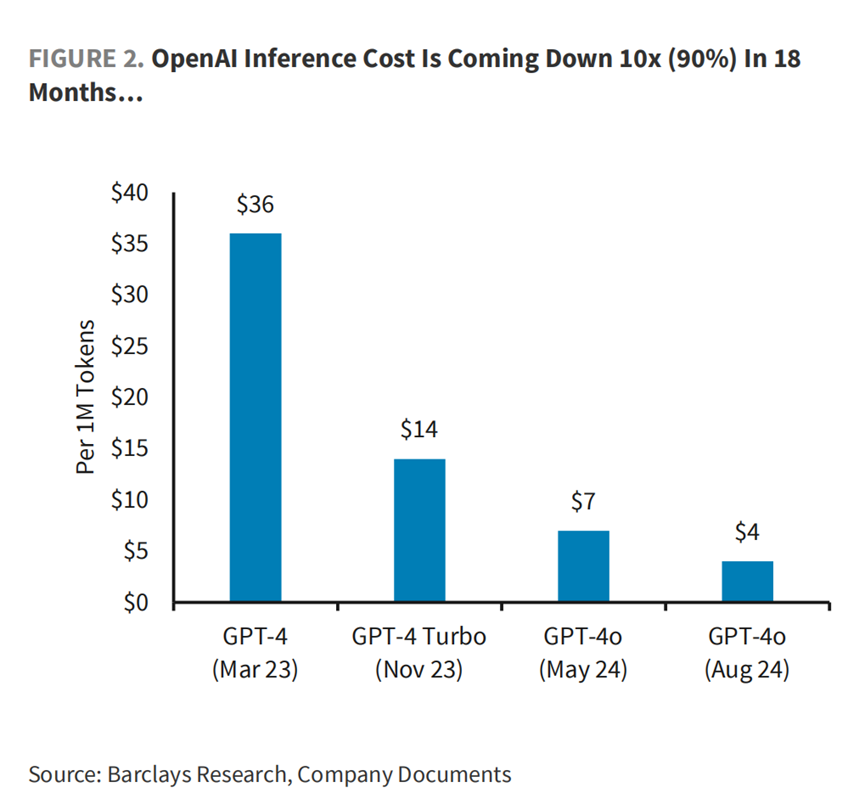

The report notes that the unit cost of inference computing is falling rapidly. Taking OpenAI as an example, Barclays estimates that the company will cut inference costs by more than 90% within 18 months. As a result, the unit economics of AI products and services should improve significantly, especially for products built on open-source large models.
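To put the 90% figure in perspective, the sketch below converts it into an implied monthly rate of decline. It assumes, for illustration only, that the decline compounds at a constant rate over the 18 months; the report does not say how the reduction is spread over time. Because the report says "more than 90%", the result is a lower bound.

```python
def implied_monthly_decline(total_reduction: float, months: int) -> float:
    """Constant monthly decline rate that compounds to `total_reduction` over `months`.

    Assumes a smooth, constant-rate decline (an illustrative assumption).
    """
    remaining_fraction = 1.0 - total_reduction   # e.g. 0.10 of the original unit cost
    monthly_factor = remaining_fraction ** (1.0 / months)
    return 1.0 - monthly_factor


rate = implied_monthly_decline(0.90, 18)
print(f"Implied monthly cost decline: {rate:.1%}")
# prints: Implied monthly cost decline: 12.0%
```

In other words, a cut of more than 90% in 18 months corresponds to unit costs falling by roughly 12% or more every month under this assumption.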

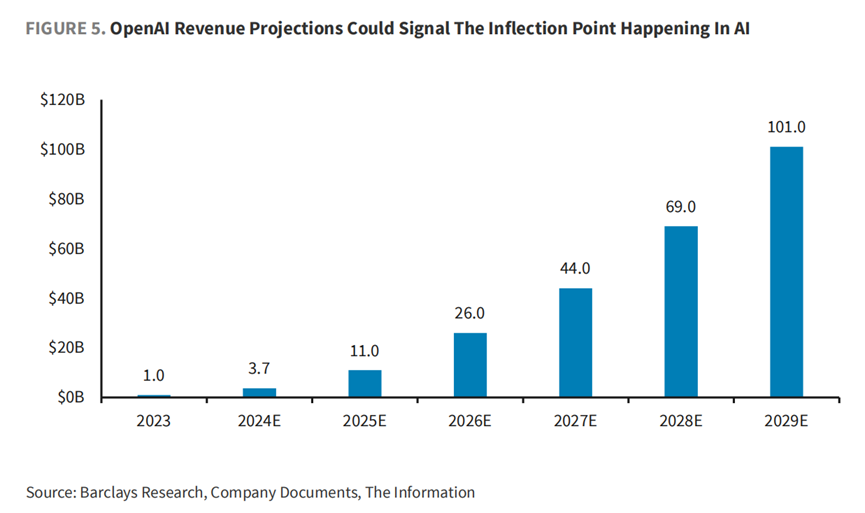

Although AI companies are widely thought to be losing money, OpenAI is actually profitable on a per-model basis. Barclays estimates that OpenAI's GPT-4 model has generated nearly $2 billion in profit over the past two years from premium ChatGPT subscriptions and API fees, against development costs of only $100 million to $200 million. OpenAI's revenue will continue to grow, which may mark a turning point in AI development.
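A quick ratio puts those figures in proportion. This is plain arithmetic on the article's numbers, not a calculation from the report, and it takes the development cost range as $100 million to $200 million.

```python
# Figures as reported in the article (USD); the cost range is taken as $100M-$200M.
gpt4_profit = 2.0e9                         # "nearly $2 billion" over two years
dev_cost_low, dev_cost_high = 1.0e8, 2.0e8  # development cost range

# Profit as a multiple of development cost at each end of the cost range.
multiple_high = gpt4_profit / dev_cost_low   # cheaper development -> higher multiple
multiple_low = gpt4_profit / dev_cost_high
print(f"Profit is roughly {multiple_low:.0f}x to {multiple_high:.0f}x development cost")
# prints: Profit is roughly 10x to 20x development cost
```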

Looking ahead, Barclays believes that the AI industry is at a crucial turning point. The introduction of AI agents will not only significantly increase the demand for inference computing but also bring new growth opportunities to enterprise and consumer markets. By 2026, the number of daily active users of consumer AI is expected to exceed 1 billion, while the penetration rate of enterprise agents is projected to reach 5%.

Barclays also points out that another notable feature of this trend is that future AI products will run mainly in the cloud, with only a small share of processing on local devices (such as phones and PCs). In particular, AI agents often need to pass a request to the model multiple times when handling a user query, which will further drive demand for cloud-based inference computing.
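The sketch below illustrates why multi-pass agents multiply inference demand. It uses the common rule of thumb that a dense transformer's forward pass costs about 2 × (parameter count) FLOPs per token processed. The `Workload` type, the model size, the token counts and the number of passes are all hypothetical placeholders, not figures from the report.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical per-query workload; none of these numbers come from the report."""
    params: float          # model parameters
    tokens_per_pass: int   # tokens processed per model call (prompt + output)
    passes: int            # model calls needed to answer one user query


def inference_flops(w: Workload) -> float:
    # Rule of thumb: ~2 * params FLOPs per token for a dense transformer forward pass.
    return 2 * w.params * w.tokens_per_pass * w.passes


chatbot = Workload(params=70e9, tokens_per_pass=1_000, passes=1)
agent = Workload(params=70e9, tokens_per_pass=1_000, passes=10)

ratio = inference_flops(agent) / inference_flops(chatbot)
print(f"Agent query uses {ratio:.0f}x the compute of a single chatbot turn")
# prints: Agent query uses 10x the compute of a single chatbot turn
```

With everything else held fixed, compute per query scales linearly with the number of passes; in practice an agent's context also tends to grow with each pass, which would push the multiple higher.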

Editor/Somer