Responding to OpenAI's recent wave of product launches, Google on Wednesday released Gemini 2.0 Flash, a major next-generation artificial intelligence model that can natively generate images and audio in addition to text. 2.0 Flash can also call third-party applications and services, giving it access to capabilities such as Google Search and code execution. According to Google, Gemini 2.0 Flash is the first model in the 2.0 family, and its main selling points are native multimodal input and output plus agent capabilities. It is twice as fast as 1.5 Pro and even surpasses 1.5 Pro on key performance benchmarks.

Author: Zhao Yuhe

Source: Hard AI

Starting Wednesday, an experimental version of 2.0 Flash is available through the Gemini API and Google's AI development platforms (AI Studio and Vertex AI). However, the audio and image generation features are open only to “early access partners” and are scheduled to roll out fully in January next year.

Over the coming months, Google said, it will launch versions of 2.0 Flash for products such as Android Studio, Chrome DevTools, Firebase, and Gemini Code Assist.

Flash upgrade

The first-generation Flash model (1.5 Flash) can only generate text and was not designed for particularly demanding workloads. According to Google, the new 2.0 Flash model is more versatile, partly because it can call tools (such as search) and interact with external APIs.
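To make the idea of tool calling concrete, here is a minimal Python sketch of how an application might route a model's tool request to local functions. It does not use the Gemini API. The `ToolCall` structure, the `web_search` and `run_code` stubs, and the `dispatch` helper are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ToolCall:
    """A hypothetical tool request emitted by a model (not a Gemini API type)."""
    name: str
    argument: str


def web_search(query: str) -> str:
    # Stub: a real application would call a search backend here.
    return f"[search results for: {query}]"


def run_code(expression: str) -> str:
    # Stub "code execution" tool: only sums integers written as "2+3+4".
    # A real tool would run code inside a sandbox.
    return str(sum(int(part) for part in expression.split("+")))


# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "run_code": run_code,
}


def dispatch(call: ToolCall) -> str:
    """Route a tool request to the matching function, or report an unknown tool."""
    tool = TOOLS.get(call.name)
    if tool is None:
        return f"error: unknown tool '{call.name}'"
    return tool(call.argument)
```

In a real integration the tool result would be sent back to the model so it can continue its answer; that round trip is omitted here.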

Tulsee Doshi, head of product for Google's Gemini models, said:

“We know that Flash is loved by developers because of its good balance of speed and performance. In 2.0 Flash, it still maintained its speed advantage, but now it's even more powerful.”

Google claims that, according to its internal testing, 2.0 Flash ran twice as fast as the Gemini 1.5 Pro model on some benchmarks and showed “significant” improvements in areas such as coding and image analysis. In fact, the company said that 2.0 Flash, with its better performance in math and “factuality,” is replacing 1.5 Pro as Gemini's flagship model.

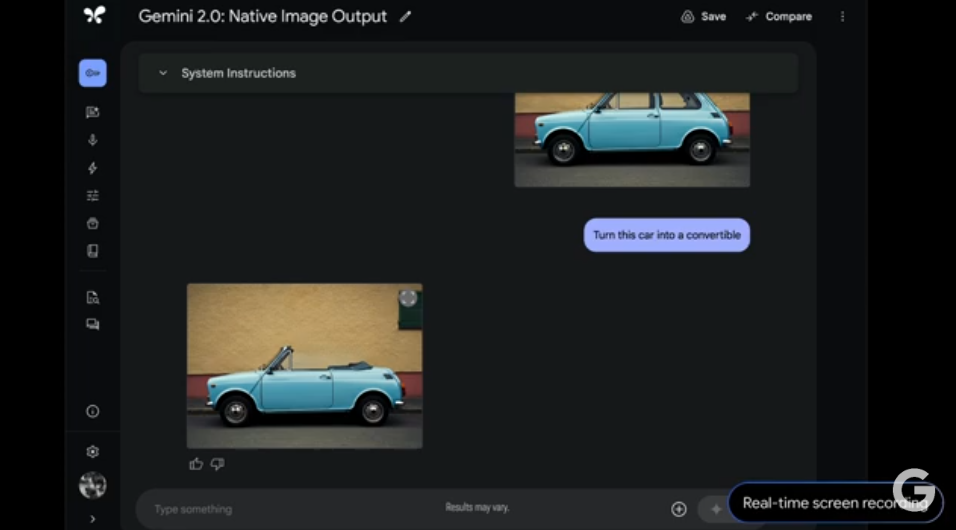

2.0 Flash can generate and modify images in addition to text. The model can also take in photos, videos, and audio recordings and answer questions about that content.

Audio generation is another key feature of 2.0 Flash, which Doshi describes as “steerable” and “customizable.” For example, the model can read text aloud in eight voices optimized for different accents and languages.

However, Google did not provide any image or audio samples generated by 2.0 Flash, so it is impossible to judge how its output quality compares with that of other models.

Google says it's using its SynthID technology to watermark all audio and images generated by 2.0 Flash. On software and platforms that support SynthID (that is, some Google products), the output of this model will be marked as synthetic content.

The move is aimed at allaying concerns about misuse. Deepfakes are indeed a growing threat: according to data from identity verification service Sumsub, the number of deepfakes detected worldwide increased fourfold from 2023 to 2024.

Multimodal API

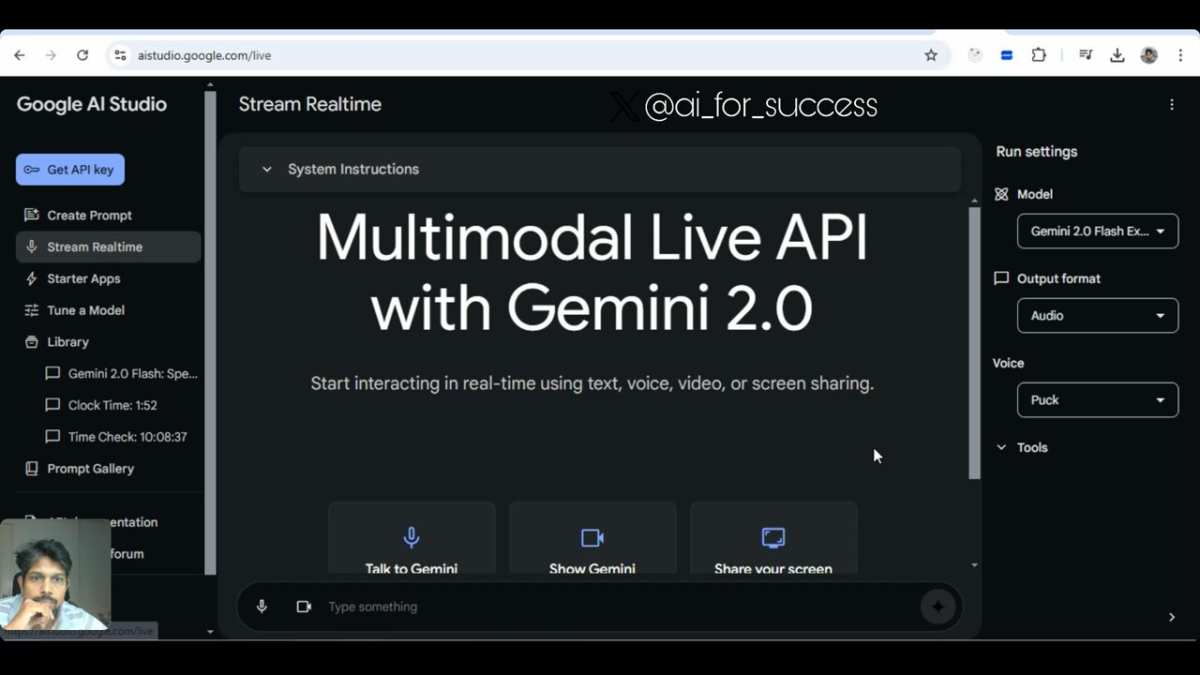

The production version of 2.0 Flash will launch next January. In the meantime, Google has launched the Multimodal Live API to help developers build apps with real-time audio and video streaming capabilities.

Through the Multimodal Live API, Google says, developers can create real-time multimodal applications that take audio and video input from a camera or screen. The API supports tool integration to complete tasks and can handle “natural conversation patterns” such as interruptions, similar to OpenAI's Realtime API.

The Multimodal Live API has been fully available since Wednesday morning.

AI agent that operates web pages

On Wednesday, Google also unveiled its first AI agent capable of taking actions on web pages, a research prototype from its DeepMind division called Project Mariner. Powered by Gemini, the agent can take over the user's Chrome browser, move the cursor on the screen, click buttons, and fill out forms, allowing it to use and navigate websites the way a human would.

Google said that starting Wednesday, the AI agent is rolling out first to a small group of pre-selected testers.

According to media reports, Google is continuing to experiment with new ways to allow Gemini to read, summarize, and even use websites. A Google executive told the media that this marks a “new paradigm shift in user experience”: users no longer directly interact with websites, but complete these interactions through generative AI systems.

Analysts believe this shift could affect millions of businesses, from publishers like TechCrunch to retailers like Walmart, which have long relied on Google to send real users to their websites.

In a demo for tech outlet TechCrunch, Google Labs director Jaclyn Konzelmann showed how Project Mariner works.

After the user installs an extension in the Chrome browser, a chat window pops up on the right side of the browser. Users can instruct the agent to complete tasks such as “create a shopping cart at the supermarket based on this list.”

The AI agent then navigates to a supermarket's website, searches for the items, and adds them to the virtual shopping cart. One obvious problem is that the agent is slow: there is a delay of about 5 seconds between each cursor movement. Sometimes the agent pauses the task and returns to the chat window to ask for clarification about certain items (such as how many carrots are needed).

Google's agent cannot complete the checkout because it does not fill in credit card numbers or billing information. Project Mariner also does not accept cookies or sign terms of service agreements on the user's behalf. Google said it deliberately does not allow the agent to perform these actions, in order to give users better control.

Behind the scenes, Google's agent takes screenshots of the user's browser window (the user must agree to this in the terms of service) and sends them to Gemini in the cloud for processing. Gemini then sends instructions for navigating the web page back to the user's computer.
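The screenshot-and-instruction cycle described above, together with the restrictions on payment details, cookies, and terms of service, can be sketched as a simple observe-decide-act loop. This is an illustrative sketch only, not Project Mariner's actual implementation. The `Action` type, the `BLOCKED_KINDS` set, and the `run_agent` function are hypothetical, and the screenshot, model, and browser functions are passed in as plain callables so the loop can be tried with stubs.

```python
from dataclasses import dataclass
from typing import Callable, List

# Action kinds this sketch refuses to perform, mirroring the restrictions
# described in the article (payment details, cookies, terms of service).
BLOCKED_KINDS = {"enter_payment", "accept_cookies", "accept_terms"}


@dataclass
class Action:
    """A hypothetical instruction returned by the remote model."""
    kind: str    # e.g. "click", "type", "done"
    target: str  # element description or text to enter


def run_agent(
    take_screenshot: Callable[[], bytes],
    ask_model: Callable[[bytes, str], Action],
    perform: Callable[[Action], None],
    task: str,
    max_steps: int = 20,
) -> List[Action]:
    """Run the loop: capture the screen, ask the model, perform one action."""
    performed: List[Action] = []
    for _ in range(max_steps):
        screenshot = take_screenshot()        # capture the active tab
        action = ask_model(screenshot, task)  # remote model picks the next step
        if action.kind == "done":
            break                             # model reports the task is finished
        if action.kind in BLOCKED_KINDS:
            break                             # stop and hand control back to the user
        perform(action)                       # execute the step in the browser
        performed.append(action)
    return performed
```

Passing the three capabilities in as functions keeps the loop itself free of any browser or network code, which makes the control flow easy to test in isolation.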

Project Mariner can also be used to search for flights and hotels, shop for household items, find recipes, and other tasks that currently require users to click on a web page to complete.

However, Project Mariner only works on the active, foreground tab of the Chrome browser, which means that while the agent is running, users cannot use the computer for other things and instead have to watch Gemini slowly click its way through the task. Koray Kavukcuoglu, chief technology officer at Google DeepMind, said this was a very intentional decision, made so that users know what Google's AI agent is doing.

Konzelmann said:

“[Project Mariner] marks a radical new user experience paradigm shift we're seeing now. We need to explore the right way to make all of this change the way users interact with the web, and also change the way publishers create experiences for users and agents.”

AI agents do research, write code, and get familiar with games

In addition to Project Mariner, Google on Wednesday also unveiled several new AI agents dedicated to specific tasks.

One AI agent, Deep Research, aims to help users explore complex topics by creating multi-step research plans. It appears to be a competitor to OpenAI's o1, which is also capable of multi-step reasoning. However, a Google spokesperson said the agent is not intended for solving math and logical reasoning problems, writing code, or performing data analysis. Deep Research is available now in Gemini Advanced and will come to the Gemini app in 2025.

When it receives a difficult or large-scale question, Deep Research creates a multi-step action plan for answering it. After the user approves the plan, Deep Research takes a few minutes to search the web, answer the question, and generate a detailed research report.

Another new AI agent, Jules, aims to help developers with coding tasks. It integrates directly into GitHub workflows, enabling Jules to review existing work and make changes directly in GitHub. Jules is now available to a small group of testers and will be released more broadly later in 2025.

Finally, Google DeepMind said it is developing an AI agent to help users find their way around games, drawing on its long experience in building game-playing AI. Google is teaming up with game developers such as Supercell to test Gemini's ability to interpret the worlds of games like Clash of Clans.
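The plan, approve, execute flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how Deep Research is actually built. The `make_plan` and `run_research` functions are hypothetical, and the planner returns a fixed list of steps where a real system would presumably ask a model to draft them.

```python
from typing import Callable, List, Optional


def make_plan(question: str) -> List[str]:
    # Hypothetical planner: returns a fixed three-step plan for illustration.
    return [
        f"Identify key sub-questions for: {question}",
        "Search the web for each sub-question",
        "Compile findings into a report",
    ]


def run_research(
    question: str,
    approve: Callable[[List[str]], bool],
    execute_step: Callable[[str], str],
) -> Optional[str]:
    """Draft a plan, wait for user approval, then run each step in order."""
    plan = make_plan(question)
    if not approve(plan):
        return None  # nothing runs without the user's sign-off
    findings = [execute_step(step) for step in plan]
    # Number the findings to form a simple report.
    return "\n".join(f"{i}. {text}" for i, text in enumerate(findings, start=1))
```

The approval callback sits between planning and execution, so a rejected plan costs nothing beyond the planning step.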

AI-generated summary

On Wednesday, Google also announced a version of “AI Overviews,” its AI-generated summary feature, built on the Gemini 2.0 model. The summaries shown for certain Google Search queries will soon be able to handle “more complex topics,” as well as “multimodal” and “multi-step” searches. Google says this includes advanced math problems and programming questions.

The new AI Overviews feature will begin limited testing this week and will roll out widely early next year.

However, since its launch this spring, AI Overviews has stirred considerable controversy, and some of its questionable statements and suggestions (such as recommending putting glue on pizza) have been widely mocked online. According to a recent report from SEO platform SE Ranking, AI Overviews cites websites that are “not completely reliable or evidence-based,” including outdated research and paid product listings.

Analysts believe the main problem is that AI Overviews sometimes struggles to tell whether a source is fact, fiction, satire, or serious content. Over the past few months, Google has changed the way AI Overviews works, limiting answers related to current events and health topics. But Google does not claim that the feature is perfect.

Despite this, Google said AI Overviews has increased search engagement, particularly among 18-to-24-year-olds, a key target group for Google.

The latest AI accelerator chip, Trillium, powers Gemini 2.0

Google unveiled its sixth-generation AI accelerator chip, Trillium, on Wednesday, claiming that the chip's performance improvements may fundamentally change the economics of AI development.

The custom processor was used to train Google's latest Gemini 2.0 AI model. Its training performance is four times that of the previous generation, and it consumes far less energy.

Google CEO Sundar Pichai explained in an announcement post that Google has connected more than 100,000 Trillium chips into a single network fabric, forming one of the world's most powerful AI supercomputers.

Trillium improves on its predecessor along several dimensions. Peak compute performance per chip has increased 4.7 times, while high-bandwidth memory capacity and inter-chip interconnect bandwidth have both doubled. More importantly, its energy efficiency has improved by 67%, a key metric for data centers facing the huge energy demands of AI training.

Trillium's commercial impact is not limited to performance metrics. Google claims that, compared with the previous-generation chip, Trillium delivers 2.5 times the training performance per dollar, which may reshape the economics of AI development.
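To put the quoted ratios in more familiar terms, the short Python calculation below converts them into relative cost and energy for a fixed training workload. It is a back-of-the-envelope sketch under two assumptions that are not stated in the source: that “energy efficiency” means work done per unit of energy, and that the gains apply uniformly to the whole workload. The function names are made up for this example.

```python
def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of a fixed workload relative to the old chip,
    given a performance-per-dollar multiplier (e.g. 2.5)."""
    return 1.0 / perf_per_dollar_gain


def relative_energy(efficiency_gain_pct: float) -> float:
    """Energy for a fixed workload relative to the old chip,
    given an energy-efficiency gain in percent (e.g. 67)."""
    return 1.0 / (1.0 + efficiency_gain_pct / 100.0)


# 2.5x performance per dollar: the same job costs 1/2.5 of the old price.
print(f"relative cost:   {relative_cost(2.5):.0%}")    # 40%
# 67% better efficiency: the same job needs 1/1.67 of the old energy.
print(f"relative energy: {relative_energy(67):.0%}")   # 60%
```

Under these assumptions, a training run that previously cost 100 units would cost about 40, and its energy use would drop by roughly 40%.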

Analysts believe that the release of Trillium intensifies competition in AI hardware, a field Nvidia has long dominated with its GPU-based solutions. While Nvidia's chips are still the industry standard for many AI applications, Google's custom chip approach may have advantages for specific workloads, particularly training very large models.

Other analysts say that Google's huge investment in custom chip development reflects its strategic bet on the importance of AI infrastructure. Google's decision to offer Trillium to cloud customers shows that it wants to be more competitive in the cloud AI market and compete fiercely with Microsoft Azure and Amazon AWS. For the tech industry as a whole, the release of Trillium shows that the battle for AI hardware supremacy is entering a new phase.
