Arbitrage Platform: Leonard Esau Baum's Baum-Welch Algorithm

In electrical engineering, computer science, statistical computing, and bioinformatics, the Baum-Welch algorithm is a special case of the expectation-maximization (EM) algorithm, used to find the unknown parameters of a hidden Markov model (HMM). It uses the forward-backward algorithm to compute the statistics needed for the E step.

The expectation-maximization (EM) algorithm is used in statistics to find maximum likelihood estimates of parameters in probabilistic models that depend on unobservable latent variables.

In statistical computing, the EM algorithm finds maximum likelihood or maximum a posteriori (MAP) estimates of parameters in probabilistic models that depend on latent variables that cannot be observed. EM is often used for data clustering in machine learning and computer vision. The algorithm alternates between two steps:

- **Expectation (E) step:** use the current parameter estimates to compute the expected log-likelihood over the hidden variables.
- **Maximization (M) step:** find the parameter values that maximize the expected log-likelihood computed in the E step.

The parameter estimates from the M step are then used in the next E step, and the two steps keep alternating.
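In the usual textbook notation, one EM iteration can be written compactly. Here $X$ is the observed data, $Z$ the latent variables, and $\theta^{(t)}$ the parameter estimate after iteration $t$; these symbols are assumed for illustration and are not used elsewhere in this article.

$$
\begin{aligned}
\text{E step:}\quad & Q(\theta \mid \theta^{(t)}) = \mathbb{E}_{Z \sim p(\cdot \mid X, \theta^{(t)})}\left[\log p(X, Z \mid \theta)\right] \\
\text{M step:}\quad & \theta^{(t+1)} = \arg\max_{\theta} \, Q(\theta \mid \theta^{(t)})
\end{aligned}
$$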

The EM algorithm was explained and given its name by Arthur P. Dempster, Nan Laird, and Donald Rubin in a classic paper published in 1977. They pointed out that the method had already "been proposed many times in special circumstances" by earlier authors.

The EM algorithm is used to find (local) maximum likelihood parameters of a statistical model when the likelihood equations cannot be solved directly. Typically these models involve latent variables in addition to unknown parameters and known observed data. Either there are missing values in the data, or the model can be formulated more simply by assuming additional unobserved data points. Take a mixture model as an example. It can be described more simply by assuming that each observed data point has a corresponding unobserved data point, or latent variable. That latent variable specifies which mixture component the data point belongs to.
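The mixture-model case can be sketched as follows. This is a minimal EM fit of a two-component one-dimensional Gaussian mixture, in which each point's hidden component label is the latent variable. The function names, the min/max initialization, and the synthetic data are illustrative assumptions.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a 1-D normal distribution."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_gaussians(data, iters=100):
    """Fit a two-component 1-D Gaussian mixture with EM (illustrative sketch).

    The latent variable of each point is the component that generated it;
    EM never observes it, only its posterior probability (responsibility).
    """
    # Crude starting guesses: one component at each end of the data.
    mu = [min(data), max(data)]
    var = [1.0, 1.0]
    w = [0.5, 0.5]
    for _ in range(iters):
        # E step: resp[n][k] = P(component k | x_n, current parameters).
        resp = []
        for x in data:
            p = [w[k] * normal_pdf(x, mu[k], var[k]) for k in range(2)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M step: re-estimate weights, means and variances from responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            w[k] = nk / len(data)
            mu[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var[k] = sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, data)) / nk
            var[k] = max(var[k], 1e-6)  # guard against a collapsing component
    return w, mu, var


if __name__ == "__main__":
    rng = random.Random(0)
    data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(5.0, 1.0) for _ in range(200)]
    w, mu, var = em_two_gaussians(data)
    print("weights:", w, "means:", mu, "variances:", var)
```

On well-separated synthetic data like this, the two estimated means should land near 0 and 5. EM only guarantees a local maximum, so a different starting guess can give a different answer.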

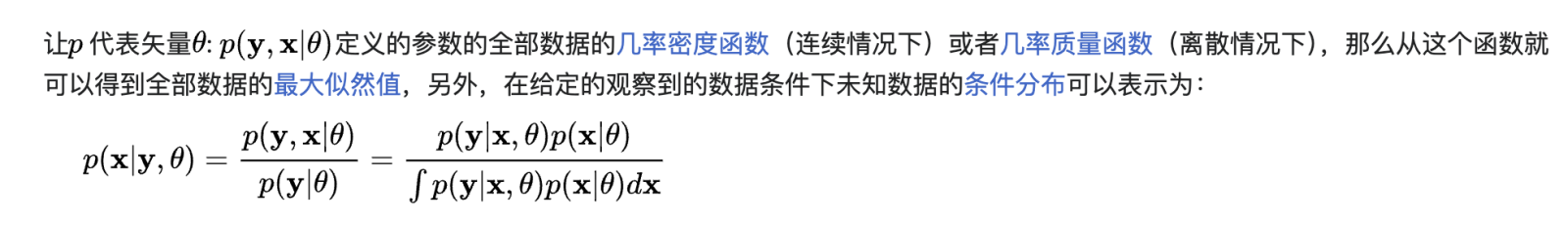

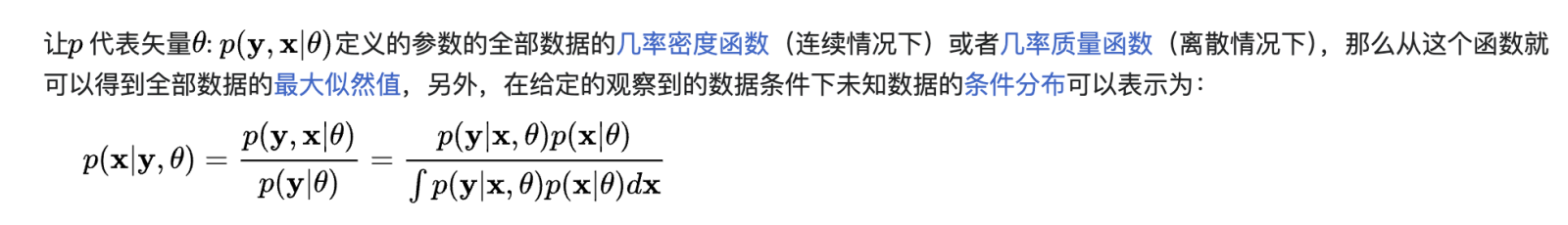

Estimate unobservable data

The Baum-Welch algorithm is named after its inventors, Leonard Esau Baum and Lloyd Richard Welch. The algorithm and hidden Markov models were first described by Baum and his colleagues at the Institute for Defense Analyses, in a series of articles from the late 1960s and early 1970s. HMMs were initially used mainly in speech processing. In the 1980s, HMMs began to emerge as a useful tool for analyzing biological systems and information, particularly genetic information. Since then, they have become an important tool for the probabilistic modeling of genomic sequences.

Forward process:
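Here is a minimal Python sketch of the standard forward recursion, $\alpha_1(i) = \pi_i\, b_i(o_1)$ and $\alpha_{t+1}(j) = b_j(o_{t+1}) \sum_i \alpha_t(i)\, a_{ij}$. In it, $\alpha_t(i)$ is the probability of seeing the first $t$ observations and being in state $i$ at time $t$. The parameter names `pi`, `A`, `B`, the 0-based time index, and the toy values are illustrative assumptions.

```python
def forward(pi, A, B, obs):
    """Forward procedure: alpha[t][i] = P(o_0..o_t, state at time t = i).

    pi[i]   : probability of starting in state i
    A[i][j] : probability of moving from state i to state j
    B[i][k] : probability of emitting symbol k in state i
    obs     : list of observed symbol indices (time starts at 0)
    """
    n = len(pi)
    # Initialization: start in state i and emit the first symbol.
    alpha = [[pi[i] * B[i][obs[0]] for i in range(n)]]
    # Induction: sum over all predecessor states, then emit the next symbol.
    for t in range(1, len(obs)):
        prev = alpha[-1]
        alpha.append([
            sum(prev[i] * A[i][j] for i in range(n)) * B[j][obs[t]]
            for j in range(n)
        ])
    return alpha


if __name__ == "__main__":
    pi = [0.5, 0.5]
    A = [[0.7, 0.3], [0.4, 0.6]]
    B = [[0.9, 0.1], [0.2, 0.8]]
    obs = [0, 0, 1]
    alpha = forward(pi, A, B, obs)
    # Summing the last row gives the likelihood P(obs) of the whole sequence.
    print("P(obs) =", sum(alpha[-1]))
```

The recursion does the same work as enumerating every hidden-state path, but in time linear in the sequence length instead of exponential.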

Backward process:
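Here is a minimal Python sketch of the standard backward recursion, $\beta_T(i) = 1$ and $\beta_t(i) = \sum_j a_{ij}\, b_j(o_{t+1})\, \beta_{t+1}(j)$. In it, $\beta_t(i)$ is the probability of the remaining observations given that the model is in state $i$ at time $t$. It assumes `A[i][j]` is the transition probability from state i to state j, `B[i][k]` is the probability of emitting symbol k in state i, and time is 0-based; these names are illustrative.

```python
def backward(A, B, obs):
    """Backward procedure: beta[t][i] = P(o_{t+1}..o_{T-1} | state at time t = i)."""
    n = len(A)
    T = len(obs)
    # Initialization: nothing is left to observe after the final step.
    beta = [[1.0] * n for _ in range(T)]
    # Induction, moving from the end of the sequence toward the start.
    for t in range(T - 2, -1, -1):
        for i in range(n):
            beta[t][i] = sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(n))
    return beta


if __name__ == "__main__":
    pi = [0.5, 0.5]
    A = [[0.7, 0.3], [0.4, 0.6]]
    B = [[0.9, 0.1], [0.2, 0.8]]
    obs = [0, 0, 1]
    beta = backward(A, B, obs)
    # Combining beta at t = 0 with the start and first emission also gives P(obs).
    print("P(obs) =", sum(pi[i] * B[i][obs[0]] * beta[0][i] for i in range(2)))
```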

Update:
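For reference, these are the standard Baum-Welch re-estimation formulas. They use the forward variables $\alpha_t(i)$, backward variables $\beta_t(i)$, transition probabilities $a_{ij}$, emission probabilities $b_i(v_k)$, observations $o_1, \dots, o_T$, and 1-based time; this notation is assumed here. $\gamma_t(i)$ is the posterior probability of being in state $i$ at time $t$, and $\xi_t(i,j)$ is the posterior probability of moving from state $i$ to state $j$ between $t$ and $t+1$.

$$
\begin{aligned}
\gamma_t(i) &= \frac{\alpha_t(i)\,\beta_t(i)}{\sum_{j} \alpha_t(j)\,\beta_t(j)} \\
\xi_t(i,j) &= \frac{\alpha_t(i)\,a_{ij}\,b_j(o_{t+1})\,\beta_{t+1}(j)}{\sum_{k}\sum_{l} \alpha_t(k)\,a_{kl}\,b_l(o_{t+1})\,\beta_{t+1}(l)} \\
\pi_i^{*} &= \gamma_1(i) \\
a_{ij}^{*} &= \frac{\sum_{t=1}^{T-1} \xi_t(i,j)}{\sum_{t=1}^{T-1} \gamma_t(i)} \\
b_i^{*}(v_k) &= \frac{\sum_{t=1}^{T} \mathbf{1}[o_t = v_k]\,\gamma_t(i)}{\sum_{t=1}^{T} \gamma_t(i)}
\end{aligned}
$$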

Suppose we have a hen, and we collect eggs every day at noon. Whether the hen lays an egg depends on some unknown hidden states. For simplicity, assume there are only two hidden states that determine whether it lays an egg. We do not know the initial state probabilities, the transition probabilities between the states, or the probability that the hen lays an egg in each state. We initialize all of them randomly as a starting guess.
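A minimal sketch of this random starting guess follows. It assumes two hidden states (S1 and S2, indices 0 and 1) and two symbols (0 = N, 1 = E). The helper names and the seed are illustrative assumptions. Every row is normalized so that it is a valid probability distribution.

```python
import random


def random_stochastic_matrix(rows, cols, rng):
    """Return a rows x cols matrix of random probabilities whose rows each sum to 1."""
    matrix = []
    for _ in range(rows):
        raw = [rng.random() + 1e-3 for _ in range(cols)]  # small offset keeps entries nonzero
        total = sum(raw)
        matrix.append([x / total for x in raw])
    return matrix


def random_hmm(n_states=2, n_symbols=2, seed=None):
    """Random starting guess for an HMM: initial vector, transition and emission matrices."""
    rng = random.Random(seed)
    pi = random_stochastic_matrix(1, n_states, rng)[0]
    A = random_stochastic_matrix(n_states, n_states, rng)   # A[i][j] = P(next state j | state i)
    B = random_stochastic_matrix(n_states, n_symbols, rng)  # B[i][k] = P(symbol k | state i)
    return pi, A, B


if __name__ == "__main__":
    pi, A, B = random_hmm(seed=42)
    print(pi, A, B, sep="\n")
```

Keeping every entry strictly positive avoids a parameter starting at exactly zero. EM can never move such a parameter away from zero.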

Suppose the observed sequence is (E = egg, N = no egg): N, N, N, N, N, E, E, N, N, N.

From this we also obtain the transitions between consecutive observations: NN, NN, NN, NN, NE, EE, EN, NN, NN.
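The list of observed pairs can be derived mechanically from the sequence. This is a small illustrative sketch; the variable names are our own.

```python
from collections import Counter

observations = "N N N N N E E N N N".split()

# Pair each observation with the one that follows it.
pairs = [a + b for a, b in zip(observations, observations[1:])]

# Tally how often each observed transition occurs.
counts = Counter(pairs)

print(pairs)  # ['NN', 'NN', 'NN', 'NN', 'NE', 'EE', 'EN', 'NN', 'NN']
print(counts)
```

Ten observations give nine pairs: six NN and one each of NE, EE, and EN.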

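Use this information to re-estimate the state transition matrix. For each observed pair, compute the probability of every hidden-state transition under the current guesses. For example, the probability that the pair NN was produced by S1 followed by S2 is P(S1)·P(N|S1)·P(S1→S2)·P(N|S2). Then sum these probabilities over all pairs and normalize so that each row of the matrix sums to 1.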
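In the full Baum-Welch update, the per-pair transition probabilities are the posteriors $\xi_t(i,j) \propto \alpha_t(i)\, a_{ij}\, b_j(o_{t+1})\, \beta_{t+1}(j)$. The new $a_{ij}$ is the expected number of $i \to j$ transitions divided by the expected number of transitions out of $i$. The sketch below performs only this normalization step, using a small hypothetical `xi` array rather than values computed from the chicken data.

```python
def reestimate_transitions(xi):
    """Re-estimate the transition matrix from expected transition counts.

    xi[t][i][j] = P(state i at time t, state j at time t+1 | observations).
    New A[i][j] = expected i->j transitions / expected transitions out of i.
    """
    n = len(xi[0])
    A_new = []
    for i in range(n):
        expected = [sum(x[i][j] for x in xi) for j in range(n)]
        out_of_i = sum(expected)  # equals the sum of gamma_t(i) over t = 0..T-2
        A_new.append([e / out_of_i for e in expected])
    return A_new


if __name__ == "__main__":
    # Hypothetical posteriors for a sequence with two adjacent pairs.
    xi = [
        [[0.5, 0.1], [0.2, 0.2]],
        [[0.3, 0.3], [0.1, 0.3]],
    ]
    print(reestimate_transitions(xi))
```

With these made-up numbers, state 0 has expected counts 0.8 (to itself) and 0.4 (to state 1). Its new row is therefore [2/3, 1/3].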

To estimate the initial state probabilities, assume the sequence starts in hidden state S1 and compute the highest probability. Repeat the procedure for S2, then normalize the results to obtain the updated initial state vector. Repeat all of the steps above until the estimates converge.
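The pieces can be combined into a minimal end-to-end sketch of Baum-Welch on the chicken sequence. It makes the following assumptions:

- S1 and S2 are states 0 and 1, and N and E are symbols 0 and 1.
- The random start comes from a seeded generator.
- "Convergence" means the log-likelihood improves by less than `tol`.

The sketch uses the standard posterior update for the initial state (π_i = γ_1(i)) rather than the simplified per-state maximum described above. The function name and defaults are illustrative.

```python
import math
import random


def baum_welch(obs, n_states=2, n_symbols=2, iters=100, tol=1e-8, seed=0):
    """Fit an HMM to one observation sequence with Baum-Welch (EM).

    Uses unscaled forward/backward variables: fine for a 10-step sequence,
    but long sequences need scaling or log-space arithmetic to avoid underflow.
    Returns (pi, A, B, log-likelihood history).
    """
    rng = random.Random(seed)

    def rand_row(k):
        raw = [rng.random() + 0.1 for _ in range(k)]
        s = sum(raw)
        return [x / s for x in raw]

    N, T = n_states, len(obs)
    pi = rand_row(N)
    A = [rand_row(N) for _ in range(N)]
    B = [rand_row(n_symbols) for _ in range(N)]
    history = []
    for _ in range(iters):
        # E step: forward and backward variables under the current parameters.
        alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
        for t in range(1, T):
            alpha.append([sum(alpha[t - 1][i] * A[i][j] for i in range(N)) * B[j][obs[t]]
                          for j in range(N)])
        beta = [[1.0] * N for _ in range(T)]
        for t in range(T - 2, -1, -1):
            beta[t] = [sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N))
                       for i in range(N)]
        likelihood = sum(alpha[-1])
        history.append(math.log(likelihood))
        # State posteriors (gamma) and transition posteriors (xi).
        gamma = [[alpha[t][i] * beta[t][i] / likelihood for i in range(N)] for t in range(T)]
        xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / likelihood
                for j in range(N)] for i in range(N)] for t in range(T - 1)]
        # M step: re-estimate the initial vector, transitions and emissions.
        pi = gamma[0][:]
        A = [[sum(xi[t][i][j] for t in range(T - 1)) / sum(gamma[t][i] for t in range(T - 1))
              for j in range(N)] for i in range(N)]
        B = [[sum(gamma[t][i] for t in range(T) if obs[t] == k) / sum(gamma[t][i] for t in range(T))
              for k in range(n_symbols)] for i in range(N)]
        # Stop once the log-likelihood has essentially stopped improving.
        if len(history) > 1 and history[-1] - history[-2] < tol:
            break
    return pi, A, B, history


if __name__ == "__main__":
    seq = [0 if c == "N" else 1 for c in "NNNNNEENNN"]
    pi, A, B, history = baum_welch(seq)
    print("pi =", pi)
    print("A =", A)
    print("B =", B)
    print("log-likelihood per iteration:", history)
```

Because EM never decreases the likelihood, the recorded history should be non-decreasing. The result is still only a local optimum, and different seeds can converge to different parameter sets.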

Disclaimer: Community is offered by Moomoo Technologies Inc. and is for educational purposes only.